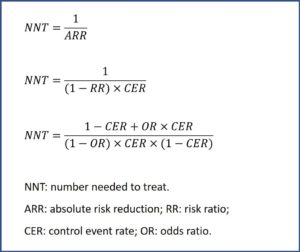

Calculation of NNT in meta-analysis.

Even the greatest have weaknesses. This is true even of the great NNT, the number needed to treat, undoubtedly the king of the absolute impact measures in clinical trial methodology.

Of course, this is not an irreparable misfortune. We just have to be well aware of its strengths and weaknesses in order to take advantage of the former and try to mitigate and control the latter. The thing is that the NNT depends on the baseline risks of the intervention and control groups, which can be unreliable travel companions and are subject to variation due to several factors.

As we all know, the NNT is an absolute measure of effect used to estimate the efficacy or safety of an intervention. Like a good marriage, this parameter is useful in good times and in bad, in sickness and in health.

On the good side, we speak of the NNT: the number of patients who have to be treated for one of them to experience an outcome we consider beneficial. On the dark side, we have the number needed to harm (NNH), which indicates how many patients we have to treat for one of them to experience an adverse event.

The NNT was originally designed to describe the effect of an intervention relative to the control group in clinical trials, but its use was later extended to interpreting the results of systematic reviews and meta-analyses. This is where problems can arise, since the way it is calculated in individual trials is sometimes carried over directly to meta-analyses, which can lead to errors.

Calculation of NNT in meta-analysis

The simplest way to obtain the NNT is to calculate the inverse of the absolute risk reduction (ARR) between the intervention and control groups. The problem is that this approach is the one most likely to be biased by the factors that influence the value of the NNT. King of the absolute impact measures though it is, the NNT has its limitations: several factors influence its magnitude, not to mention its clinical significance.
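A minimal Python sketch of this direct calculation, NNT = 1 / ARR with ARR = CER − EER (control minus experimental event rate). It assumes an undesirable event, so a positive ARR favours the intervention, and the common convention of rounding the NNT up to the next whole patient. The function names are illustrative, not taken from any package.

```python
import math


def nnt_from_risks(cer: float, eer: float) -> float:
    """NNT as the inverse of the absolute risk reduction, ARR = CER - EER.

    cer: control event rate; eer: experimental (intervention) event rate.
    A negative result means the intervention increases the event: read it as an NNH.
    """
    arr = cer - eer
    if arr == 0:
        return math.inf  # no difference between groups: the NNT is infinite
    return 1 / arr


def round_up_nnt(nnt: float) -> int:
    """Round the magnitude of an NNT (or NNH) up to a whole number of patients.

    The 1e-9 tolerance stops floating-point noise (e.g. 20.000000000000004)
    from being rounded up to the next integer.
    """
    if math.isinf(nnt):
        raise ValueError("the NNT is infinite when ARR = 0")
    return math.ceil(abs(nnt) - 1e-9)


# Hypothetical trial: 20% of controls and 15% of treated patients have the event
print(round_up_nnt(nnt_from_risks(0.20, 0.15)))  # 20
```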

One of these factors is the duration of follow-up. It can influence the number of events, good or bad, that study participants experience, which makes it incorrect to compare NNTs from studies with follow-up periods of different lengths.
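To see why, here is a sketch that assumes, purely for illustration, constant event hazards in both groups and a constant hazard ratio, so that the cumulative risk at time t is 1 − exp(−λt). The hazard of 0.1 events per person-year and the hazard ratio of 0.7 are hypothetical; the point is that the same treatment effect yields very different NNTs at 1 and at 5 years.

```python
import math


def nnt_at(years: float, control_hazard: float, hazard_ratio: float) -> float:
    """NNT at a given follow-up time, assuming constant (exponential) hazards."""
    cer = 1 - math.exp(-control_hazard * years)                 # cumulative risk, controls
    eer = 1 - math.exp(-control_hazard * hazard_ratio * years)  # cumulative risk, treated
    return 1 / (cer - eer)


# Same hypothetical treatment effect, two follow-up durations
for years in (1, 5):
    print(years, round(nnt_at(years, control_hazard=0.1, hazard_ratio=0.7), 1))
```

Under these assumptions the NNT is roughly 36 at one year but about 10 at five years, with no change in the underlying effect of the treatment.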

Another is the baseline risk of presenting the event. Bear in mind that, statistically speaking, the term “risk” does not always imply something bad: we can speak, for example, of the risk of cure. If the baseline risk is higher, more events are likely to occur and the NNT may be lower. The outcome variable used and the alternative treatment against which the intervention is compared should also be taken into account.
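A short illustration with made-up numbers, assuming an undesirable event and a relative risk (RR) that stays constant across populations: the same RR of 0.75 gives very different NNTs depending on the baseline risk.

```python
def nnt_for_baseline(cer: float, rr: float) -> float:
    """NNT when a fixed relative risk is applied to a given baseline risk.

    EER = CER * RR, so ARR = CER - EER = CER * (1 - RR).
    """
    return 1 / (cer * (1 - rr))


# Hypothetical RR of 0.75 applied to a high-risk and a low-risk population
results = {cer: nnt_for_baseline(cer, 0.75) for cer in (0.40, 0.10)}
for cer, nnt in results.items():
    print(f"CER = {cer:.0%}: NNT = {nnt:.0f}")
```

With a 40% baseline risk the NNT is 10; with a 10% baseline risk it rises to 40.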

Finally, to name a few more, the direction and size of the effect, the measurement scale and the precision of the NNT estimates (their confidence intervals) can also influence its value.
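On precision: a common way to get a confidence interval for the NNT is to take the reciprocals of the confidence limits of the ARR. The sketch below assumes a simple Wald (normal-approximation) interval for the ARR and hypothetical counts. When the ARR interval crosses zero, the NNT interval is not a single range: it runs from an NNT for benefit through infinity to an NNH.

```python
import math


def nnt_with_ci(events_c: int, n_c: int, events_t: int, n_t: int, z: float = 1.96):
    """NNT with a 95% CI obtained by inverting a Wald CI for the ARR.

    Returns (nnt, limit_a, limit_b, ci_excludes_zero). If the ARR interval
    excludes zero, the NNT interval runs from limit_a to limit_b. If it
    includes zero, it runs from an NNT of limit_a (benefit) through infinity
    to an NNH of abs(limit_b) (harm).
    """
    cer = events_c / n_c
    eer = events_t / n_t
    arr = cer - eer
    se = math.sqrt(cer * (1 - cer) / n_c + eer * (1 - eer) / n_t)
    low, high = arr - z * se, arr + z * se
    nnt = 1 / arr if arr != 0 else math.inf
    return nnt, 1 / high, 1 / low, (low > 0 or high < 0)


print(nnt_with_ci(30, 100, 15, 100))  # hypothetical: CER 30%, EER 15%
print(nnt_with_ci(20, 100, 18, 100))  # hypothetical: ARR interval crosses zero
```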

Control event rate

And this is where systematic reviews and meta-analyses run into trouble. However much we might wish otherwise, there will always be some heterogeneity among the primary studies in a review, so the factors we have discussed may differ from one study to another. It is therefore easy to see that estimating the overall NNT from the summary measures of risk in the two groups may not be the most suitable approach, since it is strongly influenced by variations in the baseline control event rate (CER).

In these situations, it is much more advisable to use more robust estimates of the NNT. The most widely used are those based on other measures of association, such as the risk ratio (RR) or the odds ratio (OR), which are more stable in the face of variations in CER. The attached figure shows the formulas for calculating the NNT from the different measures of association and effect.
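The sketch below uses the commonly cited conversions for an undesirable event; they are assumed, not guaranteed, to match the notation in the figure. From a risk ratio, NNT = 1 / (CER × (1 − RR)); from an odds ratio, NNT = (1 − CER × (1 − OR)) / (CER × (1 − CER) × (1 − OR)). Both need a value for CER, which is precisely the weak point discussed below.

```python
import math


def nnt_from_rr(cer: float, rr: float) -> float:
    """NNT from a risk ratio: EER = CER * RR, so NNT = 1 / (CER * (1 - RR))."""
    return 1 / (cer * (1 - rr))


def nnt_from_or(cer: float, odds_ratio: float) -> float:
    """NNT from an odds ratio.

    Converting the OR back to an EER for the given CER and taking 1 / (CER - EER)
    simplifies to NNT = (1 - CER * (1 - OR)) / (CER * (1 - CER) * (1 - OR)).
    """
    return (1 - cer * (1 - odds_ratio)) / (cer * (1 - cer) * (1 - odds_ratio))


# Hypothetical pooled estimates (RR = OR = 0.5) applied to a CER of 20%.
# Ratios above 1 give negative values, to be read as an NNH.
print(nnt_from_rr(0.20, 0.5), nnt_from_or(0.20, 0.5))
```

Note that the same numerical value of RR and OR does not give the same NNT (10 versus 11.25 here), because the OR only approximates the RR when the event is rare.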

In any case, we must not lose sight of the recommendation not to perform a quantitative synthesis or calculate summary measures when there is significant heterogeneity among the primary studies, since the overall estimates will then be unreliable whatever we do.
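How to judge whether heterogeneity is significant is not specified here. One widely used option, shown as an assumption rather than as the author's method, is Cochran's Q with the I² statistic, computed from each study's effect on the log scale (for example, log RR) and its standard error. The input values are hypothetical.

```python
import math


def cochran_q_i2(effects, std_errors):
    """Cochran's Q and I^2 for study effects on a log scale (e.g. log RR).

    Uses fixed-effect inverse-variance weights w_i = 1 / se_i^2.
    I^2 = max(0, (Q - df) / Q) estimates the share of variability due to
    heterogeneity rather than chance.
    """
    weights = [1 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2


# Hypothetical log RRs and standard errors from four trials
q, i2 = cochran_q_i2([math.log(0.6), math.log(0.9), math.log(0.5), math.log(1.1)],
                     [0.15, 0.12, 0.20, 0.10])
print(f"Q = {q:.2f}, I2 = {i2:.0%}")
```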

But do not think that we have solved the problem. We cannot finish this post without mentioning that these alternative methods of calculating the NNT also have their weaknesses. These have to do with obtaining an overall summary value for the CER, which also varies among the primary studies.

The simplest way would be to divide the total number of events in the control groups of the primary studies by the total number of participants in those groups. This is usually easy to do by taking the data from the meta-analysis forest plot. However, this method is not recommended, as it completely ignores the variability among studies and possible differences in randomization.

A more correct approach would be to calculate the mean or median CER of all the primary studies or, better still, some measure weighted by the variability of each study.
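A small sketch comparing the naive pooled CER with the unweighted mean and median of the study CERs, using hypothetical control-arm counts: one large low-risk trial and two small high-risk ones. The weighted option is left out here because, as the next paragraphs explain, weighting raw CERs by their variance has pitfalls of its own.

```python
import statistics

# Hypothetical control arms as (events, participants)
control_arms = [(12, 400), (9, 30), (6, 20)]

cers = [events / n for events, n in control_arms]

# Naive pooling: all control events over all control participants.
# It treats the trials as if they were one study, so the large trial dominates.
naive_cer = sum(e for e, _ in control_arms) / sum(n for _, n in control_arms)

# Study-level summaries: each trial's CER counts once
mean_cer = statistics.mean(cers)
median_cer = statistics.median(cers)

print(f"naive {naive_cer:.3f}, mean {mean_cer:.3f}, median {median_cer:.3f}")
```

The naive figure (6%) is far below both the mean (21%) and the median (30%) of the individual CERs, simply because most control participants come from the large low-risk trial.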

If baseline risk varies substantially among studies, one could even use an estimate based on the investigator’s knowledge or on other studies, or calculate the NNT over a range of plausible CER values and compare the resulting NNTs.
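The range-of-CER idea can be sketched as a simple sensitivity analysis. The pooled RR of 0.80 and the list of plausible CERs are hypothetical, and the event is assumed to be undesirable.

```python
import math


def nnt_from_rr(cer: float, rr: float) -> float:
    """NNT for an undesirable event from a risk ratio: 1 / (CER * (1 - RR))."""
    return 1 / (cer * (1 - rr))


def nnt_range(rr: float, plausible_cers):
    """Whole-patient NNTs across a range of assumed baseline risks."""
    # Round up to whole patients; the tiny tolerance absorbs floating-point noise
    return [(cer, math.ceil(nnt_from_rr(cer, rr) - 1e-9)) for cer in plausible_cers]


# Hypothetical pooled RR and a range of plausible control event rates
for cer, nnt in nnt_range(0.80, [0.05, 0.10, 0.20, 0.30]):
    print(f"CER = {cer:.0%}: NNT = {nnt}")
```

With the same pooled RR, the NNT goes from 100 at a 5% baseline risk down to 17 at 30%, which shows how much the choice of CER matters.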

We have to be very careful with methods that weight studies by their variance, since the CER has the bad habit of following a binomial rather than a normal distribution. The problem with the binomial distribution is that its variance depends strongly on its mean, reaching its maximum when the mean is around 0.5.
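A quick numerical check of this point, using the binomial variance of an observed proportion, p × (1 − p) / n, for three hypothetical control groups of the same size.

```python
def proportion_variance(p: float, n: int) -> float:
    """Binomial variance of an observed proportion: p * (1 - p) / n."""
    return p * (1 - p) / n


# Three hypothetical control groups of the same size (n = 100)
n = 100
for p in (0.50, 0.20, 0.05):
    var = proportion_variance(p, n)
    # The inverse-variance weight on the raw scale grows as p moves away from 0.5
    print(f"CER = {p:.2f}: variance = {var:.5f}, weight = {1 / var:.0f}")
```

With identical sample sizes, the study with a 5% CER receives more than five times the weight of the one at 50%, and a study with zero events would have zero variance and therefore an infinite weight.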

Conversely, the variance decreases as the mean approaches 0 or 1, so all variance-based weighting methods will give a study more weight the further its CER lies from 0.5 (remember that the CER, like any probability, ranges from 0 to 1). For this reason, the values must first be transformed so that they approximately follow a normal rather than a binomial distribution, and only then weighted.
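Transformation methods such as the double arcsine are mentioned below; as a simpler stand-in, this sketch uses the logit transformation to show the general idea: transform each CER, weight it by the inverse of its approximate variance on that scale, pool, and back-transform. It assumes a fixed-effect model and a 0.5 continuity correction for studies with 0 or n events; with marked heterogeneity, a random-effects model would usually be preferred.

```python
import math


def pooled_cer_logit(control_arms):
    """Fixed-effect inverse-variance pooling of CERs on the logit scale.

    control_arms: list of (events, n) pairs for the control groups.
    Studies with 0 or n events get 0.5 added to events and to non-events.
    """
    num = 0.0
    den = 0.0
    for events, n in control_arms:
        if events == 0 or events == n:
            events, n = events + 0.5, n + 1.0  # continuity correction
        p = events / n
        logit = math.log(p / (1 - p))
        var = 1 / events + 1 / (n - events)  # approximate variance of the logit
        weight = 1 / var
        num += weight * logit
        den += weight
    pooled_logit = num / den
    return 1 / (1 + math.exp(-pooled_logit))  # back-transform to a proportion


# Hypothetical control arms as (events, participants)
print(round(pooled_cer_logit([(12, 400), (9, 30), (6, 20)]), 3))
```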

We’re leaving…

And I think we will leave it here for today. We are not going to go into the methods for transforming the CER, such as the double arcsine, or the use of generalized linear mixed models, since those are for the most exclusive minds, among which mine is not included. Anyway, don’t get stuck on this. My advice is to calculate the NNT with statistical packages or calculators such as Calcupedev. There are other uses of the NNT that we could also discuss and that can be obtained with these tools, such as the NNT in survival studies. But that is another story…