Diagnostic accuracy with unbalanced data.

The post analyzes the problem of class imbalance in biomedical models and how overall accuracy can become useless when the minority class is the clinically relevant one. It explains which evaluation metrics are most appropriate and outlines the main strategies to handle imbalance, such as oversampling (SMOTE, ADASYN), selective undersampling (Tomek links), and ensemble methods that stabilize performance in low-prevalence scenarios.

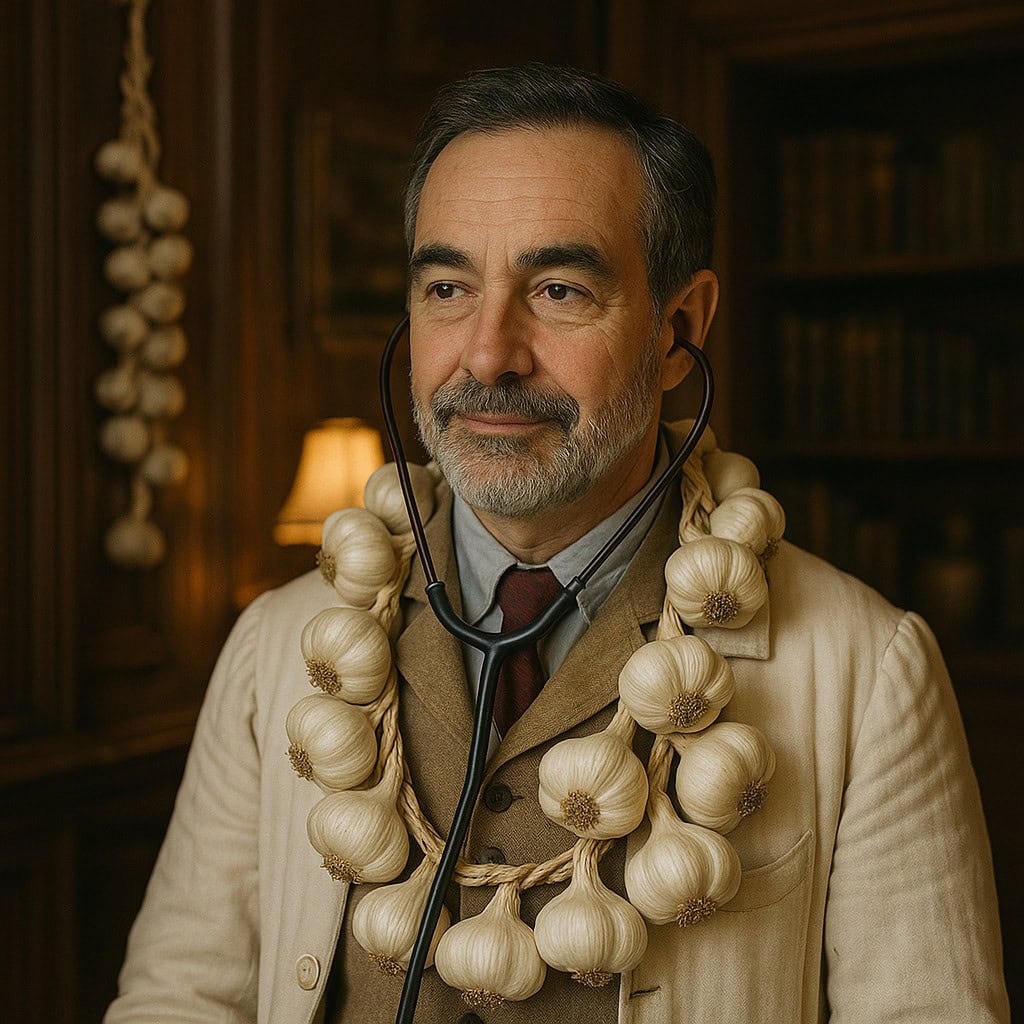

In 1894, a Viennese physician published a study titled “The Prevalence of Hemophagy in Patients with Persistent Pallor.” In it, he claimed to have developed a test capable of detecting vampires with 99.9% accuracy. The only hitch? Out of his sample of 1,000 patients, only one actually bit necks (and probably for love, not hunger). His test simply responded “not a vampire” every single time. Et voilà! A 99.9% statistical success rate.

The doctor, thrilled, went out to celebrate… at night, of course.

Alright, fine, I admit it, I just made up the story of the doctor who diagnosed vampires. But don’t think it’s too far from reality, because it neatly sums up one of the most common traps in data science and biomedical research: the illusion of accuracy.

In clinical practice, we often find ourselves in situations where the rarest cases are the ones that matter most: an uncommon disease, an unusual surgical complication, a one-in-a-thousand adverse reaction. And right there, where we should be paying the most attention, traditional metrics can play nasty tricks on us if we overlook the rarity of what we’re trying to predict and the imbalance among diagnostic possibilities.

So, in this post, we’re going to talk about how to avoid falling into the trap of unbalanced data. Or, in other words, how not to become the Viennese doctor who diagnosed vampires with flawless accuracy… but never actually found one.

99% diagnostic accuracy… and zero clinical usefulness

Let’s say you design an algorithm to detect a rare disease, something terrifying like our infamous fildulastrosis, which affects just one in every thousand people. You train your model, test it, and it comes back with a glorious 99.9% diagnostic accuracy. You toast, you dance, you publish your findings… until one of this blog’s regular readers asks the awkward question: how many of the truly sick patients did you actually detect?

You probably already see where this is going. If the model simply says “healthy” in every case, it’ll be right 99.9% of the time… but it’ll miss the one case that actually matters. In medicine, just like in vampire hunting, false negatives can be costly.

To understand why this happens, all we have to do is take a look at the contingency table (though data scientists prefer the fancier name “confusion matrix”). This 2×2 table splits the model’s hits and misses into four compartments: true positives (TP, the model says “sick” and the patient really is), true negatives (TN, says “healthy” and is right), false positives (FP, says “sick” but the patient’s fine), and false negatives (FN, says “healthy” when the patient’s actually sick).
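To make the four compartments concrete, here is a minimal sketch that counts them from two lists of labels. The function name `confusion_counts`, the coding (1 = sick, 0 = healthy) and the eight made-up patients are assumptions for illustration only.

```python
def confusion_counts(y_true, y_pred):
    """Count the four cells for binary labels (1 = sick, 0 = healthy)."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for truth, guess in zip(y_true, y_pred):
        if guess == 1:
            # The model said "sick": right (TP) or wrong (FP)?
            counts["TP" if truth == 1 else "FP"] += 1
        else:
            # The model said "healthy": right (TN) or wrong (FN)?
            counts["TN" if truth == 0 else "FN"] += 1
    return counts


# Eight made-up patients: real status versus what the model said.
y_true = [1, 0, 0, 1, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
print(counts)
```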

If we choose accuracy as our go-to metric to assess model performance, we’re only looking at the total number of correct predictions. And if the number of healthy cases vastly outnumbers the sick ones, then the errors in the cases we care about get totally diluted. It’s like applauding a doctor for curing 99% of colds… while missing every single heart attack.
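The dilution is easy to check with a few lines of arithmetic. This sketch assumes the scenario described above (1,000 people, one of them sick) and a lazy model that always answers "healthy"; the helper names are made up for the example.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all predictions that are correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def detected_fraction(tp, fn):
    """Share of the truly sick patients that the model caught."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Lazy model on 1,000 people with a single sick patient:
# it never says "sick", so TP = 0 and FP = 0.
tp, tn, fp, fn = 0, 999, 0, 1

lazy_accuracy = accuracy(tp, tn, fp, fn)
lazy_detected = detected_fraction(tp, fn)
print(f"accuracy: {lazy_accuracy:.3f}, sick patients detected: {lazy_detected:.0%}")
```

The single missed patient barely dents the accuracy, because the 999 correct "healthy" calls dominate the numerator.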

So, is there a way to dodge this trap when there’s a major imbalance between diagnostic possibilities? As usual, yes, there is.

The simplest fix is to carefully choose the most suitable metric to evaluate your model or diagnostic test. Clinicians are used to sensitivity, specificity, predictive values, likelihood ratios, ROC curves, and so on. But in machine learning, the three most popular metrics are:

– Sensitivity: of all the truly sick patients, what proportion did the model detect? This is also known as recall.

– Precision: of all the patients the model flagged as sick, what proportion actually are? In biomedicine, precision is usually known as positive predictive value.

– F1-score: this is the harmonic mean of the two above, which gives a balanced picture between them.
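The three metrics above can be sketched directly from the cells of the confusion matrix. The screening numbers below are invented (1,000 people, 10 of them sick, 20 flagged by the model), and the function names are just illustrative.

```python
def recall(tp, fn):
    """Sensitivity: detected sick patients / all sick patients."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def precision(tp, fp):
    """Positive predictive value: truly sick / all flagged as sick."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    p, r = precision(tp, fp), recall(tp, fn)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Invented screening result: 8 sick patients caught, 2 missed,
# 12 healthy people flagged by mistake, 978 correctly left alone.
tp, fn, fp, tn = 8, 2, 12, 978

model_recall = recall(tp, fn)
model_precision = precision(tp, fp)
model_f1 = f1_score(tp, fp, fn)
print(model_recall, model_precision, round(model_f1, 3))
```

Note that the accuracy of this invented model is 98.6%, lower than the lazy "everyone is healthy" model, even though it is the only one of the two that finds sick patients.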

Which one should you go with? As a general rule, if your data is well-balanced, you can use accuracy without issue. But if there’s a big imbalance, it’s better to rely on the three metrics we just described. In any case, the model’s overall performance across all diagnostic thresholds is best reflected by the area under the ROC curve.
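One way to see what the area under the ROC curve measures, without drawing the curve, is its rank interpretation: the probability that a randomly chosen sick patient gets a higher score than a randomly chosen healthy one (ties count as half). The sketch below uses that equivalence; the scores are made up and the brute-force double loop is only meant for small examples.

```python
def roc_auc(y_true, scores):
    """AUC as the share of (sick, healthy) pairs ranked in the right order."""
    sick = [s for y, s in zip(y_true, scores) if y == 1]
    healthy = [s for y, s in zip(y_true, scores) if y == 0]
    if not sick or not healthy:
        raise ValueError("AUC needs at least one patient of each class")
    wins = 0.0
    for s in sick:
        for h in healthy:
            if s > h:
                wins += 1.0   # sick patient scored higher: correct order
            elif s == h:
                wins += 0.5   # tie: counts as half
    return wins / (len(sick) * len(healthy))


# Made-up predicted probabilities for six healthy and two sick patients.
y_true = [0, 0, 0, 0, 0, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.60, 0.40, 0.90]
auc = roc_auc(y_true, scores)
print(round(auc, 3))
```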

Of course, all of this only covers the statistical side of things. The clinical context in which the model is applied might make one metric more appropriate than another.

Either way, everything we’ve said so far will help us better understand the usefulness of a diagnostic model or test. But we might also want to explore techniques to actually improve the model’s learning during training.

So, do you think we can train a model better when the target diagnosis categories are highly imbalanced? Keep reading and find out.

Creating synthetic patients (but ethically)

Once we realize we’re short on positive cases (just like with vampires or fildulastrosis), the logical next step is to balance the dataset so algorithms can actually learn to diagnose the rare cases. But what if reality doesn’t give us enough examples? Easy: we make them up, following a few well-defined rules, of course.

This is where oversampling techniques come into play. The idea is to artificially increase the number of examples in the minority class.

The simplest approach would be to just duplicate the rare cases, but that’s like showing a med student the same chest X-ray a hundred times: in the end, they’ll memorize the image but won’t learn to diagnose. The student ends up “overfitting” and will totally flop when faced with a new case.

Luckily, there’s a more creative method: SMOTE (Synthetic Minority Oversampling Technique). SMOTE doesn’t copy, it generates new synthetic examples based on the ones we already have.

Imagine each sick patient as a point in a multidimensional space where each axis represents a variable (age, blood pressure, hemoglobin level, etc.). SMOTE picks one of these points and looks for its k nearest neighbours in the same class (other sick patients). Then, it randomly chooses one of those neighbours and creates a new patient somewhere in between, combining their values. The result is a fake (but plausible) patient, expanding the minority class without just repeating cases.
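The interpolation step can be sketched in a few lines of plain Python. This is a simplified illustration of the idea, not a replacement for a tested library implementation: the function name `smote_sketch`, the value of `k`, the fixed seed and the four made-up patients (age, systolic pressure, hemoglobin) are all assumptions, and the variables are not rescaled before measuring distances, which a real analysis would probably want.

```python
import math
import random


def smote_sketch(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points between minority points and their neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority examples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest neighbours of the chosen patient, within the same class.
        others = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )
        neighbour = rng.choice(others[:k])
        # Pick a random spot on the segment that joins both patients.
        gap = rng.random()
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Made-up sick patients: (age, systolic blood pressure, hemoglobin).
sick_patients = [(62, 150, 10.1), (58, 145, 9.8), (70, 160, 10.5), (65, 155, 9.5)]
synthetic_patients = smote_sketch(sick_patients, n_new=6)
for patient in synthetic_patients:
    print(tuple(round(v, 1) for v in patient))
```

Every synthetic patient lies on a straight line between two real ones, which is why the new values never leave the range already seen in the minority class.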

What’s cool about SMOTE is that it doesn’t make up nonsense; it explores the in-between zones of the data space, where real cases could exist but just haven’t been observed yet. Going back to our med student metaphor, it’s like using patient simulators: they’re not real, but they reflect the clinical world with decent accuracy.

So SMOTE is like that diligent student who takes notes to fill in the gaps in their notebook. But there’s another type of student, pickier and a bit rebellious, who zeroes in on the stuff they don’t understand. That’s ADASYN (Adaptive Synthetic Sampling), which doesn’t just generate new examples randomly, but focuses on the parts the model finds hardest to learn.

ADASYN’s logic is simple but powerful. Let’s say we’re training a model to detect fildulastrosis, and we notice it struggles more with certain clinical profiles; for instance, young women with atypical symptoms, or elderly patients with multiple conditions.

ADASYN automatically identifies those “trouble spots” in the data space, areas where sick patients are mostly surrounded by healthy neighbours and the model is therefore more likely to make mistakes, and generates more synthetic examples exactly there. So rather than filling in gaps blindly, it strengthens the model’s weak spots, helping it to learn how to diagnose the very patients that confuse it most.
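Here is a rough sketch of how ADASYN decides where to put its synthetic patients. It assumes the usual description of the algorithm, in which the "difficulty" of each sick patient is the share of healthy patients among its k nearest neighbours, and it only covers the allocation step (the new points would then be interpolated much like in SMOTE). The function name and the two-variable toy data are made up.

```python
import math


def adasyn_allocation(minority, majority, n_new, k=3):
    """Decide how many synthetic points to create around each minority point."""
    labelled = [(p, 1) for p in minority] + [(p, 0) for p in majority]
    ratios = []
    for point in minority:
        # k nearest neighbours of this patient among everybody else.
        neighbours = sorted(
            (item for item in labelled if item[0] is not point),
            key=lambda item: math.dist(point, item[0]),
        )[:k]
        n_healthy = sum(1 for _, label in neighbours if label == 0)
        ratios.append(n_healthy / k)  # 0 = safe zone, 1 = surrounded
    total = sum(ratios)
    if total == 0:
        return [0] * len(minority)  # no minority point is hard to learn
    # Rounding means the shares may not add up exactly to n_new.
    return [round(n_new * r / total) for r in ratios]


# Toy data: four sick patients in a tight cluster, one near a healthy
# patient and one completely surrounded by healthy patients.
sick = [(1.0, 1.0), (1.2, 0.9), (1.1, 1.2), (0.9, 1.1), (2.0, 1.0), (3.0, 3.0)]
healthy = [(2.1, 0.9), (3.1, 3.0), (2.9, 3.2), (3.2, 2.8), (5.0, 5.0), (5.2, 4.9)]
allocation = adasyn_allocation(sick, healthy, n_new=8)
print(allocation)
```

The clustered patients get no synthetic companions at all, while the one lost among healthy neighbours receives most of them.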

Less is more: time to wield the scalpel

So far, we’ve looked at the problem from the perspective of missing sick patients, but there’s another possible approach: maybe we just have too many healthy ones. From this new angle, we can balance the dataset by shrinking the majority class using the opposite technique: undersampling.

This approach comes with two clear advantages. First, the model learns faster because it has fewer data points to process. Second, it’s forced to pay more attention to the rare cases. But there’s an obvious risk here: we might accidentally delete useful examples, data that carried important signals. That’s why we need to pick a fine scalpel for the trimming and resist the urge to bring out the chainsaw.

To avoid slicing at random, a few clever methods have been developed, like Tomek links, a concept introduced even before machine learning became trendy. The idea, simple and elegant, is to find pairs of examples that are suspiciously close to each other but belong to different classes. In other words, cases that confuse the model because they sit right on the borderline between “normal” and “pathological.”

Typically, the example from the majority class is the one that gets removed, making the boundary between the two classes a bit clearer. Sticking with the medical metaphors I’ve grown so fond of in this post, it’s like surgically removing healthy tissue that’s interfering with the view of a tumor during a diagnostic operation: painful, but necessary for the model to see more clearly.
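A Tomek link can be found with nothing more than nearest-neighbour searches: two examples form a link when they belong to different classes and each one is the other's closest neighbour. The sketch below follows that definition with brute-force distances; the function names and the toy points are assumptions for illustration.

```python
import math


def nearest_index(i, points):
    """Index of the point closest to points[i] (excluding itself)."""
    return min(
        (j for j in range(len(points)) if j != i),
        key=lambda j: math.dist(points[i], points[j]),
    )


def tomek_links(points, labels):
    """Pairs (i, j) of mutual nearest neighbours with different labels."""
    links = []
    for i in range(len(points)):
        j = nearest_index(i, points)
        if i < j and labels[i] != labels[j] and nearest_index(j, points) == i:
            links.append((i, j))
    return links


def drop_majority_in_links(points, labels, majority_label=0):
    """Remove only the majority-class member of every Tomek link."""
    to_drop = {
        idx
        for pair in tomek_links(points, labels)
        for idx in pair
        if labels[idx] == majority_label
    }
    return [(p, l) for idx, (p, l) in enumerate(zip(points, labels)) if idx not in to_drop]


# Toy data: 0 = healthy, 1 = sick. The last two points sit on the border.
points = [(1.0, 1.0), (1.1, 1.0), (1.3, 1.1), (3.0, 3.0), (3.1, 3.0), (2.0, 2.0), (2.05, 2.0)]
labels = [0, 0, 0, 1, 1, 0, 1]

links = tomek_links(points, labels)
cleaned = drop_majority_in_links(points, labels)
print(links, len(cleaned))
```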

The perks of teamwork

Once we’ve “fixed” our data and got everything nicely balanced, it’s time to build a model that’s solid and effective. And just like in many day-to-day tasks, teamwork can make all the difference.

So-called ensemble methods combine several simpler models (like multiple decision trees) to produce a final result that’s more stable and accurate. Think of it like a medical committee: each specialist gives their opinion, and the combined diagnosis is usually better than any one doctor’s alone.

We’ve already talked about these methods in more detail in a previous post, but here’s the quick version: there are two main approaches.

The first is to build lots of trees using techniques like bagging (bootstrap aggregating) or random forests. Once we’ve built them, we average their outputs to get our prediction.

The second approach, also using decision trees, is to apply boosting techniques, where each tree is trained one after another to improve on the mistakes of the previous ones.

You can go back to that earlier post if you want to refresh your memory.

One of the great things about decision trees is that they come with a few tricks of their own, beyond what we’ve discussed so far. Random forests, for instance, can be built using stratified sampling, which ensures each sample maintains a representative or balanced class distribution.
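As an illustration of the balanced flavour of that sampling, the sketch below draws one bootstrap sample with the same number of patients from each class, which is the kind of sample each tree of a forest could be trained on. The function name, the choice of sampling as many cases per class as the minority class has, and the 95/5 toy dataset are assumptions.

```python
import random


def balanced_bootstrap(labels, seed=0):
    """Draw, with replacement, the same number of indices from every class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    # Use the size of the smallest class for all classes.
    n_per_class = min(len(indices) for indices in by_class.values())
    sample = []
    for indices in by_class.values():
        sample.extend(rng.choices(indices, k=n_per_class))
    return sample


# Toy dataset: 95 healthy patients (0) and 5 sick ones (1).
patient_labels = [0] * 95 + [1] * 5
sample_indices = balanced_bootstrap(patient_labels)
sampled_labels = [patient_labels[i] for i in sample_indices]
print(sampled_labels)
```

Repeating the draw with a different seed for each tree gives every tree a balanced, but different, view of the data.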

Plus, many of these algorithms allow us to assign higher weights to the minority classes during training. This tweaks the loss function or the node-splitting criteria so that mistakes involving minority classes are penalized more.
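To see what such weights do to a loss function, here is a small sketch with a weighted log loss. The weighting rule (total cases divided by the number of classes times the cases in each class) is one common "balanced" heuristic, and the function names and the 98/2 toy data are assumptions for the example.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency."""
    counts = Counter(labels)
    n = len(labels)
    return {label: n / (len(counts) * count) for label, count in counts.items()}


def weighted_log_loss(y_true, p_sick, weights):
    """Average log loss where each patient counts according to its class weight."""
    total, weight_sum = 0.0, 0.0
    for y, p in zip(y_true, p_sick):
        w = weights[y]
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
        weight_sum += w
    return total / weight_sum


# Toy data: 98 healthy (0), 2 sick (1), and a model that gives
# everybody a 1% probability of being sick.
y_true = [0] * 98 + [1] * 2
p_sick = [0.01] * 100

weights = balanced_class_weights(y_true)
plain_loss = weighted_log_loss(y_true, p_sick, {0: 1.0, 1: 1.0})
weighted_loss = weighted_log_loss(y_true, p_sick, weights)
print(weights, round(plain_loss, 3), round(weighted_loss, 3))
```

With equal weights the lazy model looks fine; once each sick patient weighs as much as 49 healthy ones, the same predictions become very expensive.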

And if that weren’t enough, boosting algorithms (like AdaBoost, Gradient Boosting, XGBoost, LightGBM, etc.) dynamically shift their attention towards the errors: AdaBoost increases the weights of misclassified observations, while gradient boosting methods fit each new tree to the errors that remain. With imbalanced data, the misclassified examples are often the minority-class ones (the harder ones to classify), so they tend to count for more in later iterations. The model ends up learning more from the mistakes it made on those underrepresented cases.
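The reweighting idea is easiest to see in a single round of classic AdaBoost. The sketch below assumes the textbook update for two classes (a learner weight alpha computed from the weighted error, then an exponential up- or down-weighting of each observation); the function name and the ten toy patients are made up, and gradient boosting libraries work differently under the hood.

```python
import math


def adaboost_reweight(weights, y_true, y_pred):
    """One AdaBoost round: return the updated, normalised weights and alpha."""
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / sum(weights)
    if not 0 < error < 1:
        raise ValueError("this sketch needs a weighted error strictly between 0 and 1")
    alpha = 0.5 * math.log((1 - error) / error)
    # Misclassified patients gain weight, correctly classified ones lose it.
    updated = [
        w * math.exp(alpha if t != p else -alpha)
        for w, t, p in zip(weights, y_true, y_pred)
    ]
    total = sum(updated)
    return [w / total for w in updated], alpha


# Ten patients, two of them sick; the first weak tree calls everybody healthy.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 10
start_weights = [0.1] * 10

new_weights, alpha = adaboost_reweight(start_weights, y_true, y_pred)
print([round(w, 4) for w in new_weights], round(alpha, 3))
```

After one round, the two missed sick patients carry half of the total weight, so the next tree can no longer afford to ignore them.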

And don’t forget the trick we mentioned earlier: tweaking the probability threshold used to assign the final class (instead of sticking with the default 0.5). This lets us fine-tune our metrics and better adapt them to the clinical context.
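A quick sketch of what moving the threshold does, using made-up predicted probabilities for three sick and seven healthy patients. The function names and the alternative threshold of 0.25 are arbitrary choices for the example.

```python
def classify(probabilities, threshold=0.5):
    """Label as sick (1) every patient at or above the threshold."""
    return [1 if p >= threshold else 0 for p in probabilities]


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


# Made-up model output: three sick patients followed by seven healthy ones.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
p_sick = [0.80, 0.45, 0.30, 0.40, 0.20, 0.10, 0.05, 0.35, 0.15, 0.02]

default_result = sensitivity_specificity(y_true, classify(p_sick, 0.5))
lowered_result = sensitivity_specificity(y_true, classify(p_sick, 0.25))
print(default_result, lowered_result)
```

Lowering the threshold catches all three sick patients at the price of two false alarms; whether that trade is worth it depends on the clinical context.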

We’re leaving…

And that’s where we’ll leave things for today.

We’ve seen that dealing with imbalanced data is a bit like practicing medicine in a hospital full of rare cases: it takes balance, the right metrics, and a healthy dose of skepticism.

Bragging about 99% accuracy isn’t enough. You need to know what your model is getting right, and what it’s completely missing. Otherwise, you could end up publishing the definitive study on vampires… without ever having seen one in your whole career. So, remember: accuracy can be as seductive, and as misleading, as Dracula’s fangs.

We’ve also looked at some of the more sophisticated tricks up our sleeve to rebalance diagnostic categories when faced with a serious imbalance. Still, in many cases, adjusting the diagnostic threshold and picking the right metric can go a long way.

In data science, there are a few go-to metrics that we in the biomedical world aren’t as used to, like the F1-score. Sure, it’s handy to wrap sensitivity and positive predictive value into a single number. But if you think about it, the F1-score has one big blind spot that could really matter to us: it completely ignores true negatives.

Ignoring the correctly classified healthy cases can be risky, especially if you’re working on a cancer screening program or diagnosing a very rare disease, just to name a few. In these scenarios, true negatives really do matter.
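The blind spot is easy to verify: the F1-score can be written as 2·TP / (2·TP + FP + FN), and true negatives appear nowhere in it. In this small sketch (invented counts, illustrative function names) the same hits and misses are placed in a small clinic and in a mass screening program.

```python
def f1_from_counts(tp, fp, fn):
    """F1-score written directly in terms of the confusion matrix cells."""
    return 2 * tp / (2 * tp + fp + fn)


def specificity(tn, fp):
    """Share of healthy people correctly left alone."""
    return tn / (tn + fp)


# Same hits and misses, very different numbers of correctly cleared people.
tp, fp, fn = 8, 12, 2
f1_small_clinic = f1_from_counts(tp, fp, fn)    # with TN = 100
f1_mass_screening = f1_from_counts(tp, fp, fn)  # with TN = 100,000

print(f1_small_clinic, f1_mass_screening)
print(specificity(100, fp), specificity(100_000, fp))
```

The F1-score is identical in both settings, while specificity goes from about 89% to almost 100%.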

Luckily, there’s another metric that takes all four cells of the confusion matrix into account and gives you a balanced measure of overall model performance. Its value ranges from -1 (total disaster), to 0 (random guessing), to +1 (perfection).

We’re talking about the Matthews correlation coefficient, a metric that doesn’t get fooled by big numbers or shady datasets. But that’s another story…
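As a small teaser for that other story, here is a sketch of the coefficient computed from the four cells with its standard formula. Returning 0 when the denominator is zero is a common convention that I'm assuming here, and the two examples reuse invented counts: the lazy model that always says "healthy", and a model that catches 8 of 10 sick patients among 1,000 people.

```python
import math


def matthews_corrcoef_from_counts(tp, tn, fp, fn):
    """MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))."""
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        return 0.0  # assumed convention when a row or column is empty
    return (tp * tn - fp * fn) / denominator


# Lazy model: 99.9% accuracy, but it never flags anyone.
lazy_mcc = matthews_corrcoef_from_counts(tp=0, tn=999, fp=0, fn=1)

# Invented model that catches 8 of 10 sick patients with 12 false alarms.
useful_mcc = matthews_corrcoef_from_counts(tp=8, tn=978, fp=12, fn=2)

print(lazy_mcc, round(useful_mcc, 3))
```

The vampire-proof detector scores exactly like random guessing, which is rather less flattering than its accuracy.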