Discrete probability distributions

Discrete probability distributions allow us to establish the full possible range of values of an event when it is described with a discrete random variable. Examples of the use of the Bernoulli’s, binomial, geometric, and hypergeometric distributions are shown.

We can all agree how nice it is to be totally exhausted at the end of the day, get into bed and fall asleep in a matter of seconds. I can tell you that, most of the time, the next day I don’t even remember falling asleep.

Of course this is not always the case. There are times when the brain insists on continuing to function, sometimes due to concerns of our daily life, but other times it insists on the most useless and absurd thoughts, despite the fact that the rest of the body cries out for disconnection.

For these fateful days (or nights) when you can’t fall asleep, I recommend an infallible trick: counting sheep.

It practically never fails. The secret is to count them without paying much attention to the sheep that are passing over the pillow and without obsessing over the size of the herd that is accumulating under the bed, and much less over the type of sheep that we have counted.

I mention this last point so that you do not make the same mistake that my cousin usually makes. The poor guy is an insomniac and, following my advice, he starts counting sheep. The problem is that, since he has a naturalistic vocation, he begins to pay attention to the color of each sheep, whether it has long hair, whether it is of one breed or another, and things like that.

But that is only half the story: he also begins to calculate the probability that the next sheep will be of one type or another. Do you want to know how he does it? Keep reading and you will see.

Probability distributions

We already know that probability measures the degree of uncertainty or possibility of occurrence of each of the possible results of an event. This is usually calculated in the simplest cases by dividing the number of favorable events by the number of possible events.

For example, if we roll a six-sided die, the probability of getting a two will be 1/6. Thus, the probability can have a value from 0 (it is impossible for the event to occur) to 1 (it is certain that the event will occur).

But calculating the probability of an event is not always so simple. For more complex calculations, it is usual to resort to the probability distribution that the event follows in the population.

The probability distribution describes the entire range of values that the event, usually represented by a random variable, can take, together with the probability of each one. It therefore allows us to establish the probability that the event occurs when performing a given experiment.

Discrete probability distributions

We already know that random variables can be continuous or discrete. Similarly, probability distributions are also continuous or discrete, depending on the type of variable they describe.

The best known of these probability distributions is undoubtedly the normal distribution, which is a continuous probability distribution.

However, my cousin, for his speculations with sheep, uses discrete probability distributions, which are what we have to resort to when we want to count objects. This is so because discrete random variables take a countable set of values, typically integers, which may be positive and/or negative.

There are numerous discrete probability distributions, but here we are going to focus on my cousin’s favorites and see how he uses them. These are the Bernoulli’s distribution, the binomial distribution, the geometric distribution, and the hypergeometric distribution.

Bernoulli’s distribution

Bernoulli’s distribution, also called dichotomous distribution, is used to represent a discrete random variable that can only have two mutually exclusive outcomes. Furthermore, we can only apply this distribution when we perform a single experiment. If we do several, we will have to resort to the binomial distribution, which we will see later.

These two outcomes of a Bernoulli’s experiment are often called success and failure. Here it is important to note that “failure” does not mean the opposite of success, but any other result than success.

For example, if we define a Bernoulli’s experiment by rolling a die and considering success to roll a 6, a failure will be to roll 1, 2, 3, 4, or 5.

Like all probability distributions, we can define the Bernoulli’s distribution in terms of some of its parameters. Thus, if the probability of success is “p” and the probability of failure is “1-p”, the distribution can be defined by its mean (p) and its variance (p(1-p)).

This distribution is the simplest. Suppose that among the sheep that my cousin counts there are 25% churras, 30% merinos, 15% carranza sheep and the rest, manchega sheep (these are different Spanish breeds of sheep).

To find out the probability that the next sheep to cross his pillow is a carranza, he just has to apply Laplace’s rule: favorable cases divided by possible cases. In this case, 15/100 = 0.15.
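As a small sketch in R, a Bernoulli experiment can be treated as a binomial experiment with a single trial (size = 1). That shortcut and the variable names are assumptions made for this illustration, not something the example above depends on.

```r
# Breed proportions taken from the example; manchega is "the rest"
breeds <- c(churra = 0.25, merina = 0.30, carranza = 0.15)
p_manchega <- 1 - sum(breeds)

# Success = the next sheep is a carranza
p <- breeds[["carranza"]]

# A Bernoulli experiment is a binomial experiment with one trial
p_success <- dbinom(1, size = 1, prob = p)
p_failure <- dbinom(0, size = 1, prob = p)

# Mean and variance of the Bernoulli distribution
bern_mean <- p
bern_var  <- p * (1 - p)

print(c(success = p_success, failure = p_failure))
print(c(mean = bern_mean, variance = bern_var, manchega = p_manchega))
```

Here "failure" groups churra, merina and manchega sheep together, which is why its probability is simply 1 - p.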

Binomial distribution

Things get a little complicated for my cousin if he wants to calculate probabilities with more than one sheep, since then a single Bernoulli’s experiment is not enough. For example, let’s imagine that he wants to know how likely he is to count at least 3 churras among the first 15 sheep.

For such a case, my cousin uses the binomial distribution. It is similar to Bernoulli’s, but it tells us the probability of obtaining a given number of successes when carrying out n independent experiments, each of them with only two possible outcomes.

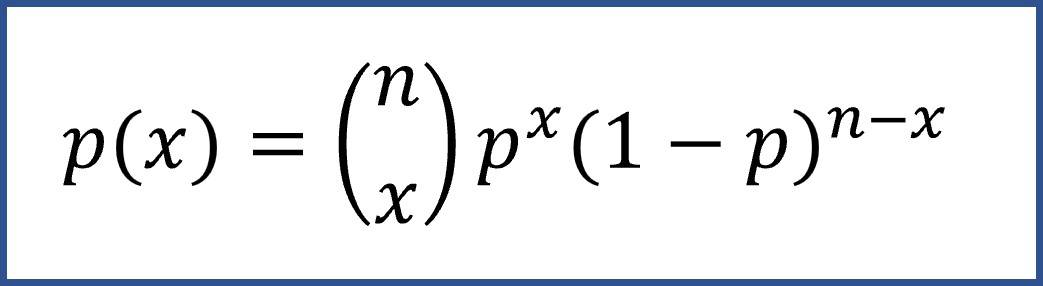

For those who love formulas, the general formula to calculate the binomial probability of obtaining k successes in n experiments, where p is the probability of success, is the following:

$$P(X = k) = \binom{n}{k} p^{k} (1-p)^{n-k}$$

As we can see from the formula, to characterize a binomial probability distribution we only need the number of experiments (n) and the probability of success (p). Its mean can be calculated as np and its variance as np(1-p).
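The binomial formula can be written as a short R function and compared with R's built-in dbinom. This is only a sketch: the function name binom_prob is hypothetical, and the case of exactly 3 churras is used as an illustration.

```r
# Binomial probability of exactly k successes in n experiments
binom_prob <- function(k, n, p) {
  choose(n, k) * p^k * (1 - p)^(n - k)
}

n <- 15    # number of sheep counted
p <- 0.25  # probability that a sheep is churra

# Exactly 3 churras among the first 15 sheep
by_hand  <- binom_prob(3, n, p)
built_in <- dbinom(3, size = n, prob = p)
print(c(by_hand = by_hand, built_in = built_in))

# Mean and variance of the distribution
print(c(mean = n * p, variance = n * p * (1 - p)))
```

Both values should agree, at roughly 0.225, and the mean of 3.75 says that we should expect between 3 and 4 churras in every 15 sheep.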

Let’s go back to my cousin’s problem. He wants to know the probability that a minimum of 3 churras will pass among the first 15 sheep. In this case, n = 15 and p = 0.25 (25% of the sheep are churra). To find the probability, we could substitute the values in the previous formula for every count from 3 to 15 and add up the results, but we are going to ask the R program to do it for us. Since pbinom(q, n, p) returns the probability of q or fewer successes, the probability of at least 3 is the complement of the probability of 2 or fewer:

1 - pbinom(2, 15, 0.25)

R tells us that the probability is 0.76. If we want to know the probability that fewer than 3 will pass, we calculate the complement of the current value: 1 – 0.76 = 0.24. We can also ask R:

pbinom(2, 15, 0.25)
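Because it is easy to be off by one with cumulative probabilities, the following sketch checks what pbinom actually returns. It assumes the same n and p as above; the variable names are illustrative.

```r
n <- 15
p <- 0.25

# pbinom(q, n, p) is the sum of the point probabilities for 0, 1, ..., q
cumulative_3 <- pbinom(3, n, p)
summed_3     <- sum(dbinom(0:3, n, p))
print(c(pbinom = cumulative_3, summed = summed_3))

# lower.tail = FALSE returns P(X > q), the same as 1 - pbinom(q, n, p)
more_than_3 <- pbinom(3, n, p, lower.tail = FALSE)  # 4 or more churras
at_least_3  <- pbinom(2, n, p, lower.tail = FALSE)  # 3 or more churras
print(round(c(more_than_3 = more_than_3, at_least_3 = at_least_3), 2))
```

The last line gives 0.54 for more than 3 churras and 0.76 for at least 3, so the threshold passed to pbinom has to be one less than the minimum number of successes we are interested in.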

Geometric distribution

Being awake at three in the morning, my cousin begins to ask himself more delirious questions. For example, if carranza sheep are the least frequent (15%), what is the probability that a carranza sheep will not appear until 9 sheep of other breeds have passed?

Here neither of the two previous distributions is of any use. To solve this problem we need the geometric distribution, which is useful when experiments are repeated while waiting for a certain event.

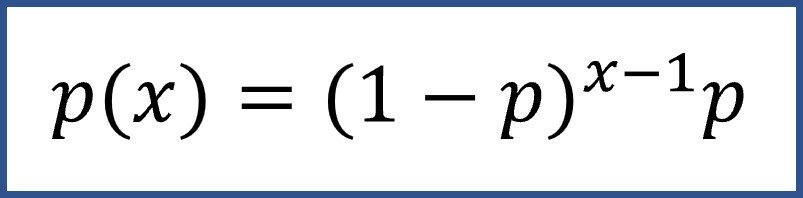

The geometric distribution is a discrete distribution that allows us to calculate the probability that k experiments have to be carried out for an event to occur for the first time, where p is the probability of the event.

If we think about it a bit, the probability decreases as k increases: it becomes less and less likely that we will have to wait for a larger number of experiments to observe success. For this reason the geometric distribution is highly skewed to the right. For those who might be interested, the mean is defined as 1/p and the variance as (1-p)/p^2.

Let’s solve my cousin’s problem. The probability of seeing a carranza sheep is 0.15, so the probability that it is of another breed is 1 – 0.15 = 0.85. Also, keep in mind that each sheep (each experiment) is independent of the one before or the one after.

Thus, we start by calculating the probability of seeing other breeds in the first 9 attempts: we will multiply the probabilities of each independent event, that is, 0.85^9 = 0.23. We now finish calculating the probability of 9 “non-successes” (another breed) and one success (carranza): 0.23 x 0.15 = 0.03.
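The step-by-step calculation above can be reproduced in R without any special function. This is a minimal sketch, with variable names chosen only for illustration.

```r
p <- 0.15  # probability that a sheep is carranza
k <- 10    # the first carranza is the tenth sheep

# Probability of 9 consecutive sheep of other breeds
p_nine_others <- (1 - p)^(k - 1)

# ...followed by one carranza sheep
p_first_on_tenth <- p_nine_others * p

print(round(c(nine_others = p_nine_others,
              first_on_tenth = p_first_on_tenth), 2))

# Mean and variance of the number of sheep needed to see the first carranza
print(c(mean = 1 / p, variance = (1 - p) / p^2))
```

The rounded results are 0.23 and 0.03, the same values obtained by hand.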

If you have understood the previous calculation, you will understand the geometric probability formula, which would have allowed us to do the calculation directly:

$$P(X = k) = (1-p)^{k-1} p$$

But my cousin is not happy with this result and wonders how many sheep have to pass, on average, to see three carranza sheep. As we have already said, the mean number of sheep needed to see one carranza is 1/p, so for three of them it is 3 x (1/0.15) = 20: about 20 sheep will have to pass for three to be of carranza breed.
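A quick simulation can make this average more tangible. The sketch below is not part of the original calculation: it assumes that the wait for three carranza sheep is the sum of three independent geometric waits, and the seed and number of simulations are arbitrary choices.

```r
set.seed(42)  # fixed seed so the run is repeatable

p     <- 0.15    # probability that a sheep is carranza
n_sim <- 100000  # number of simulated sleepless nights (arbitrary)

# rgeom() returns the failures before a success, so adding 1 gives
# the number of sheep counted up to and including each carranza
waits <- matrix(rgeom(3 * n_sim, prob = p) + 1, ncol = 3)

# Total sheep counted until the third carranza in each simulated night
total_sheep <- rowSums(waits)

print(3 * (1 / p))        # theoretical mean
print(mean(total_sheep))  # simulated mean, which should be close to it
```

The simulated mean should land very close to 20, although any single night can be much shorter or much longer than that.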

In any case, it is advisable to do the geometric probability calculations with a calculator or a statistical program. We are going to ask R what is the probability that no carranza sheep will appear until the tenth sheep we count. Keep in mind that R’s dgeom function takes the number of failures before the first success (9 in our case), not the total number of experiments:

dgeom(9, 0.15)

R gives us the same value, 0.03. There is a 3% chance that a carranza sheep won’t show up until the tenth sheep of our sleepless night.
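R's geometric functions are parameterized by the number of failures before the first success, which is easy to overlook. The following sketch, with illustrative variable names, compares the built-in function with the manual product and also shows the cumulative version, pgeom.

```r
p <- 0.15

# dgeom(x, prob) takes x = number of failures before the first success,
# so "first carranza on the tenth sheep" corresponds to x = 9
by_formula <- (1 - p)^9 * p
built_in   <- dgeom(9, prob = p)
print(c(by_formula = by_formula, built_in = built_in))

# Probability that the first carranza appears within the first 10 sheep
within_ten <- pgeom(9, prob = p)
print(round(within_ten, 2))
```

Passing 10 instead of 9 would answer a slightly different question, the first carranza arriving as the eleventh sheep, even though both results happen to round to 0.03.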

Hypergeometric distribution

And we are going to end our sleepless night with the question that anguishes my cousin at about five in the morning: of the 35,000 sheep that are already under his bed, if we choose 100 at random, what is the probability that, at most, 22 are churra?

For this, we must resort to the last of the distributions that we are going to talk about today: the hypergeometric distribution.

The hypergeometric distribution is a discrete probability distribution useful for those cases where samples are drawn or where we do repeated experiments without replacement of the element we have drawn. So we see that it fits this problem.

If we start from a population of N elements, N1 will be successes and N2 will be failures (non-successes), taking into account that each element can only belong to one of the two groups. If we obtain a sample of size n without replacement (so the extractions are not independent), we can calculate the probability of obtaining a given number of successes.

In our example, N = 35,000. The number of successes (being churra) will be 35,000×0.25 (25% of the sheep are churra), that is, 8,750 churra sheep. We would use R to calculate the probability that there are a maximum of 22 churra in our sample of 100 sheep:

phyper(22, 8750, 35000-8750, 100)

The result is 0.28: there is a 28% probability that, if we randomly draw 100 sheep, there will be at most 22 churra in the sample.
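To see what phyper is adding up, the point probabilities can be computed by hand as the number of ways of choosing the successes, times the number of ways of choosing the failures, divided by the number of possible samples. The sketch below assumes that standard formula; the function name hyper_prob is hypothetical, and logarithms are used because the binomial coefficients involved can overflow.

```r
N  <- 35000     # sheep under the bed
N1 <- N * 0.25  # churra sheep (successes)
N2 <- N - N1    # sheep of other breeds (failures)
n  <- 100       # sample size

# Point probability of exactly k churras, computed on the log scale
hyper_prob <- function(k) {
  exp(lchoose(N1, k) + lchoose(N2, n - k) - lchoose(N, n))
}

# At most 22 churras: add the probabilities for 0, 1, ..., 22
by_hand  <- sum(hyper_prob(0:22))
built_in <- phyper(22, N1, N2, n)
print(c(by_hand = by_hand, built_in = built_in))

# With such a large flock, the binomial gives nearly the same answer
print(pbinom(22, n, 0.25))
```

The binomial value is very close because drawing 100 sheep out of 35,000 barely changes the proportion of churras left in the flock. With a small flock the two distributions would differ noticeably.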

In summary

As we can see, the four probability distributions that we have used are useful discrete distributions for when we do counts since the random variable is a discrete one.

When do we use each of them? When it is a single experiment with a dichotomous result, the choice is simple: Bernoulli’s distribution. When dealing with multiple independent experiments and we want to know the probability of a given number of successes, we will use the binomial distribution.

Other times we will want to know the number of experiments that we will have to carry out to be successful: our tool in these cases will be the geometric distribution. Finally, when we draw a sample without replacement and we want to know the probability of obtaining one type of element or another, we will need to resort to the hypergeometric probability distribution which, for those who do not know, is the one behind Fisher’s exact test.

We are leaving…

And here we will leave it for today. Don’t think my cousin falls asleep after the last problem is solved.

Nothing is further from reality.

In his stubborn insomnia, when the sun begins to peek over the horizon, my cousin puts the tin lid on his problems with the sheep and wonders how many of them he will have to count for the tenth manchega sheep to enter his herd. For this he needs to use a distribution that we have not talked about today and that we will return to another time: the negative binomial distribution. But that is another story…